Cloud hosting Sitecore - Disaster Recovery

In my previous posts in this series, I looked at how you can use the global reach and deployment automation capabilities of the cloud to provide Sitecore installations that support users located around the globe without compromising on the analytics and personalisation capabilities of the Sitecore platform. In this post I look at how you can use the cloud to provide automated DR failover for your existing on-premises hosted website.

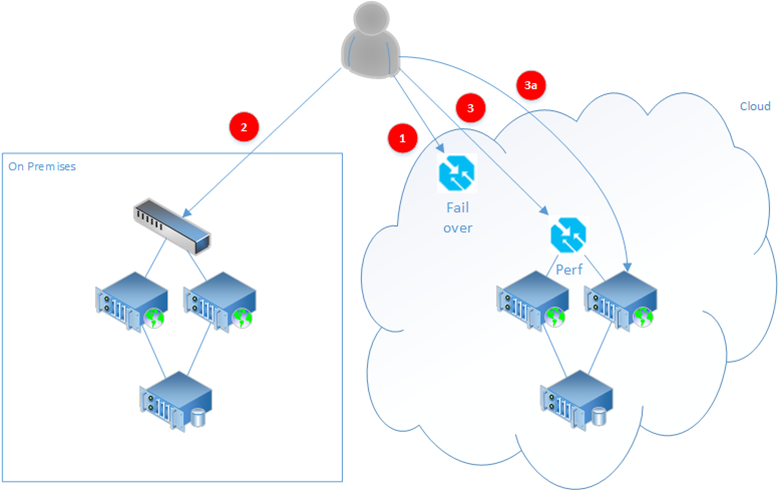


Other posts in this series

Cloud hosting Sitecore - Serving global audiences
Cloud hosting Sitecore - Cloud development patterns

Pattern 4: Disaster Recovery

Sometimes you don't need to have Sitecore running actively at multiple locations: perhaps you already host on premises and only want to use the cloud to provide a standby service in the event of an outage of the primary hosting environment.

This example shows failover for the web environment only. In a more complex scenario, other systems that the website relies on must also be able to fail over, or the site must be built to be resilient to outages of integrated systems that do not have the same level of failover flexibility.

Microsoft Azure

Azure Traffic Manager can be used to facilitate this architecture as well, as it can distribute traffic not only between Azure cloud services but to any DNS endpoint, including ones located outside Azure.

To provide a disaster recovery service for your on-premises hosted Sitecore instance, you will need to add a second Azure Traffic Manager profile, configure it to use the Failover load balancing method, and add the external endpoint of the primary site. Currently this can only be done using PowerShell or the REST API, not via the portal.

The diagram above shows the new Failover Traffic Manager (1), to which the primary DNS record of the website is directed. All requests for the website are routed through the Failover Traffic Manager, which is configured with its first endpoint pointing to the on-premises environment (2). In a failover configuration, the order in which the endpoints are listed determines their failover priority.

The on-premises environment is set up exactly as it works today, with a local load balancer providing high availability within this primary location.

If the Failover Traffic Manager detects that the endpoint pointing to the on-premises environment has stopped responding, it will instead route all traffic to the second listed endpoint, which in this example is the Traffic Manager profile set up by the Sitecore Azure module (3). This in turn chooses one of the cloud service instances running the Sitecore site and directs the user to it (3a).
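To make the priority ordering concrete, the PowerShell sketch below models how a Failover profile chooses an endpoint: it walks the endpoints in the order they were added and picks the first healthy one. It is an illustration only. Select-FailoverEndpoint and the Healthy flag are hypothetical; the real Traffic Manager takes endpoint health from its own probes and answers at the DNS level.

```powershell
# Hypothetical model of a Failover profile: endpoints are checked in the order
# they were added, and the first one marked healthy receives the traffic.
function Select-FailoverEndpoint {
    param(
        # Endpoints in priority order; each needs DomainName and Healthy properties.
        [Parameter(Mandatory)][object[]]$Endpoints
    )
    foreach ($endpoint in $Endpoints) {
        if ($endpoint.Healthy) {
            return $endpoint.DomainName
        }
    }
    # This sketch returns $null when no endpoint is healthy.
    return $null
}

# Usage: the on-site primary is down, so the Azure endpoint is chosen.
$endpoints = @(
    [pscustomobject]@{ DomainName = 'onsite.deloittedigital.com'; Healthy = $false }
    [pscustomobject]@{ DomainName = 'myexample.perf.trafficmanager.net'; Healthy = $true }
)
Select-FailoverEndpoint -Endpoints $endpoints   # returns 'myexample.perf.trafficmanager.net'
```

As soon as the primary reports healthy again, the same walk selects it first, which is why the on-site endpoint must be added before the cloud one.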

Setting this up in Azure is a little complex, because you can't add a non-Azure endpoint to Traffic Manager through the portal. Instead, you have to use PowerShell.

In this example I will set up a separate DR failover Traffic Manager instance using the Failover balancing method. I then save the created profile into a PowerShell variable for further manipulation in subsequent commands.

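```powershell
# Create the DR profile; with the Failover method, endpoint order sets priority.
New-AzureTrafficManagerProfile -Name DRFailover -DomainName examplefailover.trafficmanager.net `
    -LoadBalancingMethod Failover -MonitorProtocol Http -MonitorPort 80 `
    -MonitorRelativePath "/sitecore/service/heartbeat.aspx" -Ttl 30

# Load the profile into a variable so later commands can modify it.
$profile = Get-AzureTrafficManagerProfile -Name DRFailover
```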

The monitoring path specified here is installed as part of the Sitecore Azure module, so if you aren't using that module you will need to provide your own monitoring page to determine whether the site is functioning correctly. The page should return HTTP 200 when all is well and an error status such as 500 when there is a problem.
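If you do write your own monitoring page, it can be worth checking by hand that it returns the expected status codes before Traffic Manager relies on it. This is a minimal PowerShell sketch: the function names are hypothetical, the default path simply reuses the heartbeat path above, and only HTTP 200 is treated as healthy.

```powershell
# Hypothetical helper: healthy means exactly HTTP 200, as described above.
function Test-MonitorStatusHealthy {
    param([Parameter(Mandatory)][int]$StatusCode)
    return $StatusCode -eq 200
}

# Hypothetical helper: requests the monitoring page and returns its HTTP status code.
function Get-MonitorStatusCode {
    param(
        [Parameter(Mandatory)][string]$BaseUrl,
        # Assumption: reuses the path from the profile above; replace with your own page.
        [string]$RelativePath = '/sitecore/service/heartbeat.aspx',
        [int]$TimeoutSec = 10
    )
    $uri = $BaseUrl.TrimEnd('/') + $RelativePath
    try {
        $response = Invoke-WebRequest -Uri $uri -UseBasicParsing -TimeoutSec $TimeoutSec
        return [int]$response.StatusCode
    }
    catch {
        # HTTP error statuses (e.g. 500) surface as exceptions that carry the response.
        $failed = $_.Exception.Response
        if ($null -ne $failed) { return [int]$failed.StatusCode }
        # No HTTP response at all (DNS failure, timeout, refused connection).
        return 0
    }
}

# Usage (needs network access to the site):
# $code = Get-MonitorStatusCode -BaseUrl 'http://onsite.deloittedigital.com'
# Test-MonitorStatusHealthy -StatusCode $code
```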

Next, we add an endpoint that points to our on-site Sitecore instance; as the first endpoint in the list, it receives all traffic by default. Endpoints other than cloud services or websites, including those hosted outside Azure, must be defined with the “Any” endpoint type.

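```powershell
# Primary endpoint: the on-site farm. Hosts outside Azure must use -Type Any.
$profile = Add-AzureTrafficManagerEndpoint -TrafficManagerProfile $profile -DomainName onsite.deloittedigital.com -Status Enabled -Type Any
```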

Finally, we add an endpoint for the failover location in the Azure cloud, and then commit the updated Traffic Manager configuration using the Set-AzureTrafficManagerProfile cmdlet.

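```powershell
# Secondary endpoint: the geographically load-balanced Traffic Manager profile in Azure.
$profile = Add-AzureTrafficManagerEndpoint -TrafficManagerProfile $profile -DomainName myexample.perf.trafficmanager.net -Status Enabled -Type Any

# Commit the endpoint changes to Azure.
Set-AzureTrafficManagerProfile -TrafficManagerProfile $profile
```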

In this instance I’m adding an endpoint that refers to another Traffic Manager profile in Azure, which provides geographic load balancing. Alternatively, I could refer directly to a cloud service hosting the delivery farm by using CloudService as the value of the Type parameter.
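Because Traffic Manager works at the DNS level, the -Ttl 30 value used when creating the profile also bounds how long clients may keep using a cached answer for the primary after an outage. A rough, hypothetical estimate of worst-case failover time is the probe detection window plus one TTL. The probe interval and failure count below are assumptions to check against the Traffic Manager documentation, not values from this post.

```powershell
# Rough, hypothetical estimate: the probes must fail enough times to mark the
# primary down, then resolvers may still hold the old DNS answer for up to one TTL.
function Get-EstimatedFailoverSeconds {
    param(
        [Parameter(Mandatory)][int]$ProbeIntervalSeconds,
        [Parameter(Mandatory)][int]$FailedProbesBeforeFailover,
        [Parameter(Mandatory)][int]$DnsTtlSeconds
    )
    $detectionSeconds = $ProbeIntervalSeconds * $FailedProbesBeforeFailover
    return $detectionSeconds + $DnsTtlSeconds
}

# Assumed probe settings (30 s interval, 4 failures) with the -Ttl 30 from the profile.
Get-EstimatedFailoverSeconds -ProbeIntervalSeconds 30 -FailedProbesBeforeFailover 4 -DnsTtlSeconds 30   # 150
```

A lower TTL shortens the tail of the estimate at the cost of more DNS queries reaching Traffic Manager.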

Amazon Web Services

AWS Route 53 DNS hosting can be used to monitor the availability of a primary data centre hosting location and automatically fail over to a second location if it becomes unavailable. This is essentially the same process as the Traffic Manager approach outlined above.

When adding a DNS record, the Failover routing policy lets us return a primary record only while its associated health check is passing. In this example I’ve created a CNAME record for www.testdomain.com which points to my primary onsite environment (onsite.testdomain.com). In the routing policy for the record I mark this as the primary location and set up a health check which regularly requests the Sitecore health check page from that environment.
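The health check can also be scripted rather than created in the console. This sketch assumes the AWS CLI makes the final call; New-SitecoreHealthCheckConfig is a hypothetical helper that builds the JSON for aws route53 create-health-check, and its default resource path reuses the Sitecore heartbeat page from the Azure example as an assumption.

```powershell
# Hypothetical helper: builds the JSON body for
#   aws route53 create-health-check --caller-reference <unique-string> --health-check-config file://healthcheck.json
# The resource path is an assumption (the heartbeat page from the Azure example).
function New-SitecoreHealthCheckConfig {
    param(
        [Parameter(Mandatory)][string]$HostName,
        [string]$ResourcePath = '/sitecore/service/heartbeat.aspx',
        [int]$Port = 80
    )
    $config = [ordered]@{
        Type                     = 'HTTP'
        FullyQualifiedDomainName = $HostName
        Port                     = $Port
        ResourcePath             = $ResourcePath
        RequestInterval          = 30  # seconds between checks (Route 53 allows 10 or 30)
        FailureThreshold         = 3   # consecutive failures before the endpoint counts as unhealthy
    }
    return ConvertTo-Json -InputObject $config
}

# Usage: write the config for the on-site environment, then call the AWS CLI.
# New-SitecoreHealthCheckConfig -HostName 'onsite.testdomain.com' | Set-Content -Path healthcheck.json
```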

A second DNS record for www.testdomain.com points to my cloud-hosted farm and uses the “Secondary” failover record type. When the health check on the primary fails, Route 53 returns this record instead.
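Likewise, the primary and secondary records could be created together as one change batch. New-FailoverChangeBatch is a hypothetical helper that emits the JSON expected by aws route53 change-resource-record-sets; the hosted zone ID, health check ID, TTL and cloud farm hostname are placeholders you would supply.

```powershell
# Hypothetical helper: builds the change batch JSON for
#   aws route53 change-resource-record-sets --hosted-zone-id <zone-id> --change-batch file://failover.json
# Record names, targets, TTL and the health check ID are supplied by the caller.
function New-FailoverChangeBatch {
    param(
        [Parameter(Mandatory)][string]$RecordName,
        [Parameter(Mandatory)][string]$PrimaryTarget,
        [Parameter(Mandatory)][string]$SecondaryTarget,
        [Parameter(Mandatory)][string]$PrimaryHealthCheckId,
        [int]$Ttl = 60
    )
    # One failover CNAME record; only the primary carries a health check here.
    function New-RecordChange([string]$Id, [string]$Role, [string]$Target, [string]$HealthCheckId) {
        $recordSet = [ordered]@{
            Name            = $RecordName
            Type            = 'CNAME'
            SetIdentifier   = $Id
            Failover        = $Role
            TTL             = $Ttl
            ResourceRecords = @(@{ Value = $Target })
        }
        if ($HealthCheckId) { $recordSet['HealthCheckId'] = $HealthCheckId }
        return [ordered]@{ Action = 'UPSERT'; ResourceRecordSet = $recordSet }
    }
    $batch = [ordered]@{
        Comment = 'Sitecore DR failover records'
        Changes = @(
            (New-RecordChange 'onsite-primary' 'PRIMARY' $PrimaryTarget $PrimaryHealthCheckId)
            (New-RecordChange 'cloud-secondary' 'SECONDARY' $SecondaryTarget '')
        )
    }
    # Depth must reach Changes -> ResourceRecordSet -> ResourceRecords -> Value.
    return ConvertTo-Json -InputObject $batch -Depth 10
}

# Usage (cloud hostname and health check ID are placeholders):
# New-FailoverChangeBatch -RecordName 'www.testdomain.com' -PrimaryTarget 'onsite.testdomain.com' -SecondaryTarget '<cloud-farm-hostname>' -PrimaryHealthCheckId '<health-check-id>' | Set-Content -Path failover.json
```

Both records share the same name and type; the SetIdentifier values only need to be unique so Route 53 can tell them apart.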

As you can see, this scenario is considerably simpler to configure in the AWS Management Console than in Azure.

Other considerations

Disaster recovery is important for business-critical operations, but it usually comes at a significant cost, because it means keeping a second environment ready for the unlikely event that the primary environment becomes wholly unavailable. Depending on the Recovery Time Objective (RTO) specified for the system, this can mean an entire hosting environment sitting idle most of the time as a warm standby.

Having your DR environment in the cloud can provide a significant benefit here, as you can choose to provision only a minimal number of servers (for instance, just enough to publish your content to) and then use the auto-scaling capabilities of your chosen cloud platform to add delivery servers once failover traffic drives up CPU usage on the skeleton delivery farm.
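To illustrate the skeleton-farm idea, here is a deliberately simplified, hypothetical scale rule in PowerShell: add a delivery server while average CPU stays above a threshold, remove one when it drops well below, and stay within minimum and maximum bounds. The thresholds and limits are assumptions; in practice the cloud platform's own auto-scaling service evaluates rules like this for you.

```powershell
# Hypothetical scale rule for a skeleton delivery farm (one step per evaluation).
function Get-DesiredInstanceCount {
    param(
        [Parameter(Mandatory)][int]$CurrentInstances,
        [Parameter(Mandatory)][double]$AverageCpuPercent,
        [double]$ScaleOutThreshold = 70,
        [double]$ScaleInThreshold = 25,
        [int]$MinInstances = 1,
        [int]$MaxInstances = 10
    )
    if ($AverageCpuPercent -gt $ScaleOutThreshold) { $desired = $CurrentInstances + 1 }
    elseif ($AverageCpuPercent -lt $ScaleInThreshold) { $desired = $CurrentInstances - 1 }
    else { $desired = $CurrentInstances }
    # Clamp to the configured bounds so the farm never drops below the skeleton size.
    return [Math]::Min($MaxInstances, [Math]::Max($MinInstances, $desired))
}

# Usage: failover traffic arrives at a one-server farm and CPU stays high for a while.
$count = 1
foreach ($cpu in 95, 90, 85, 60) {
    $count = Get-DesiredInstanceCount -CurrentInstances $count -AverageCpuPercent $cpu
}
$count   # 4
```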


Up next...

In the final installment of this series, we'll have a look at some of the key software design decisions that you need to consider when you are planning your cloud deployment to ensure that your site will run properly on a public cloud platform.
